Diaphora, a program diffing plugin for IDA Pro

UPDATE: The plugin is now published on GitHub.

Some weeks ago I started developing a binary diffing plugin for IDA Pro (in IDA Python) like Zynamics BinDiff, DarunGrim or Turbo Diff. There are various reasons to create one more (open source) plugin for this task, but the following are the main ones:

- We need an Open Source plugin/tool that is updated, maintained and easy to modify or adapt.

- The plugin should offer much more functionality than the existing ones.

- The plugin should be as deeply integrated in IDA as possible (because 99% of serious researchers use IDA as the main tool).

- The plugin must not be subject to a big corporation’s desires (e.g., Google).

The tool I have used the most, and the one I liked the most, was Zynamics BinDiff. However, after Google bought the company, updates to it have been either too slow or non-existent (you can check this issue and, my favourite, this one, where Google people tell users to actually patch the binary and say that, maybe, they can have a real fix by the next week). Also, nobody can be sure Google is not going to finally kill the product, either by making it exclusively a private tool (i.e., only for Google) or simply by dropping it because they don’t want to support it for some reason (like it killed Google Code and other things before). For this reason, because I don’t like any of the current open source plugins for bindiffing and, also, because they lack most of the features that, in my mind, a decent modern binary diffing tool should have, I decided to create one of my own: Diaphora.

NOTE: If you’re not interested in the non-technical aspects of this project, skip to the “Finding differences in new versions (Patch diffing)” section 😉

Introduction

Diaphora (διαφορά, in Greek “difference”) is a pure Python plugin for IDA Pro to perform program comparison, what is often referred to as “Binary Diffing”. This plugin is based on all the previously published research and work of Thomas Dullien (aka Halvar Flake) and Rolf Rolles, as well as my own research and ideas on this subject. I always found that the bindiff tools did not import enough information from database to database, if any at all. For example: I’m a heavy user of structures and enumerations in IDA and I always have to import them manually. This is tedious and I’m lazy. Another problem: sometimes I perform malware analysis and I want to check the call graph of the malware samples: only Zynamics BinDiff does so. Also, many times I need to match functions from x86, x86_64 and ARM binaries interchangeably. The Zynamics plugin works “great” overall, but can fail to match many functions because the compiler used is different, naturally. However, the Hex-Rays decompiler is not typically bothered by such changes and the pseudo-code is rather similar, if not 100% equal. So, why not also use the decompiler? And this is (one of the many things) I do: I use both the AST the Hex-Rays decompiler offers and the pseudo-code. It allows me to perform, interchangeably, binary diffing with x86, x86_64 and ARM at levels that are ahead of the current binary diffing tools or plugins.

It isn’t 100% public yet because it is in BETA stage, but it will be published soon, likely on GitHub. Anyway, I think that’s enough talking about dates and about what it is and what it is not; let’s see it in action 😉

Finding differences in new versions (Patch diffing)

In order to use Diaphora we need at least two binary files to compare. I will take as an example 2 different versions of the “avast” binary from Avast for Linux x86_64. The files have the following hashes:

- ed5bdbbfaf25b065cf9ee50730334998 avast

- 72a05d44b2ac3d7a1c9d2ab5939af782 avast-72a05d44b2ac3d7a1c9d2ab5939af782

The file “avast-

We only need to care about 2 things:

Field “Export current database to SQLite”. This is the path to the SQLite database that will be created with all the information extracted from the IDA database of this avast binary.

Field “Use the decompiler if available”. If the Hex-Rays decompiler is available and we want to use it, we will leave this check-box marked, otherwise un-check it.
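Because the export is an ordinary SQLite file, it can be inspected outside IDA once it has been written. The following is a minimal sketch using Python's standard `sqlite3` module. It only lists the tables and their row counts and deliberately assumes nothing about the schema Diaphora creates; the function name `list_tables` and the path in the usage note are hypothetical.

```python
import sqlite3


def list_tables(db_path):
    """Return {table_name: row_count} for any SQLite database file."""
    conn = sqlite3.connect(db_path)
    try:
        cur = conn.cursor()
        # sqlite_master is SQLite's built-in catalogue of schema objects.
        cur.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
        names = [row[0] for row in cur.fetchall()]
        counts = {}
        for name in names:
            # Identifiers cannot be bound as parameters, so quote them instead.
            quoted = '"{}"'.format(name.replace('"', '""'))
            cur.execute("SELECT COUNT(*) FROM {}".format(quoted))
            counts[name] = cur.fetchone()[0]
        return counts
    finally:
        conn.close()
```

For example, `list_tables("/path/to/exported.sqlite")` (a placeholder path) would return a dictionary with one entry per table found in the exported file.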

After selecting the appropriate values, press OK. It will start exporting all the data from the IDA database. When the export process finishes, the message “Database exported.” will appear in IDA’s Output Window. Now, close this database, save the changes and open the “avast” binary. Wait until IDA’s auto-analysis finishes and then run Diaphora as with the previous binary file. This time, in the 2nd field, the one named “Open SQLite database to diff”, we will select the path to the .sqlite file we just exported in the previous step, as shown in the next figure:

After this, press the OK button, as we will use the default options. It will first export the current IDA database to the SQLite format understood by Diaphora and then, right after finishing, compare both databases. It will show an IDA wait box dialog with the current heuristic being applied to match functions in both databases, as shown in the next figure:

After a while a set of lists (choosers, in Hex-Rays parlance) will appear:

There is one more list that is not shown for this database, the one named “Unreliable matches”. This list holds all the matches that aren’t considered reliable. However, in the case of this binary with symbols, there isn’t even a single unreliable result. There are, however, unmatched functions in both the primary (the latest version) and the secondary database (the previous version):

The previous image shows the functions not matched in the secondary database, that is: the functions removed in the latest version. The second figure shows the functions not matched in the primary database, that is: the new functions added:

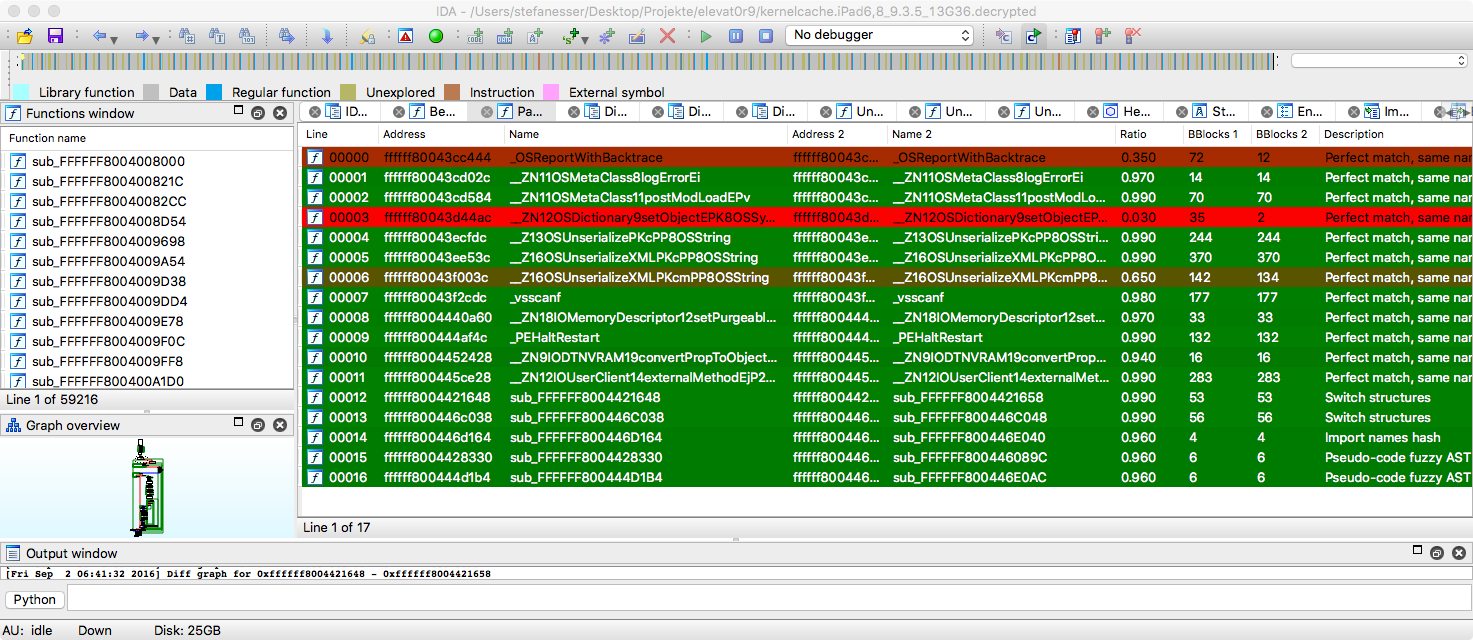

It seems they added various functions to check for Intel instruction sets (SSE, AVX, etc.). Also, they added 2 new functions called handler and context. Let’s now take a look at the “Best matches” tab:

There are many functions in the “Best matches” tab: 2556, out of the 2659 functions in the primary database. The results shown in the “Best matches” tab are the functions matched with some heuristic (like “100% equal”, where all attributes are equal, or “Equal pseudo-code”, where the pseudo-code generated by the decompiler is equal) that, apparently, don’t have any differences at all. If you’re diffing these binaries to find vulnerabilities fixed with a new patch, just skip this tab; you will be more interested in the “Partial matches” one 😉 In that tab we have many more results:

It shows the functions matched between both databases and, in the description field, it says which heuristic matched and the ratio of differences. If you’re looking for functions where a vulnerability was likely fixed, this is where you want to look. It seems that the function “handle_scan_item”, for example, was heavily modified: the ratio is 0.49, which means that more than 49% of the function differs between both databases. Let’s see the differences: we can see them in an assembly graph, in plain assembly, or we can diff the pseudo-code too. Right click on the result and select “Diff assembly in a graph”; the following graph will appear:

The nodes in yellow are those with only minor changes; the pink ones are those that are either new or heavily modified; and the blank ones are the basic blocks that were not modified at all. Let’s now diff the assembly in plain text: go back to the “Partial matches” tab, right click on the function “handle_scan_item” and select “Diff assembly”:

It shows the differences, in plain assembly, that one would see by using a tool like the Unix command “diff”. We can also diff the pseudo-code: go back to the “Partial matches” tab, right click on the function and select “Diff pseudo-code”:

As we can see, it shows all the differences in the pseudo-code in a side-by-side diff, as with the assembly diff. Once you know how the 3 different ways to see differences work, you can choose your favourite or use all 3, depending on the specific case. Indeed, I use all 3 depending on the kind of change I’m looking for.
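The views above live inside IDA, but the underlying idea of a textual diff plus a similarity ratio can be illustrated with Python's standard `difflib`. This is a minimal sketch under stated assumptions: the two listings are invented sample lines, the helper names are hypothetical, and nothing here claims to be how Diaphora itself computes its ratios.

```python
import difflib


def similarity_ratio(lines_a, lines_b):
    """Similarity in [0, 1] between two listings given as lists of lines."""
    return difflib.SequenceMatcher(None, lines_a, lines_b).ratio()


def plain_diff(lines_a, lines_b, name_a="secondary", name_b="primary"):
    """Unified diff, similar to what the Unix `diff -u` command prints."""
    return list(
        difflib.unified_diff(
            lines_a, lines_b, fromfile=name_a, tofile=name_b, lineterm=""
        )
    )


# Invented sample listings: only the stack size differs.
old = ["push rbp", "mov rbp, rsp", "sub rsp, 0x20", "call some_function", "leave", "retn"]
new = ["push rbp", "mov rbp, rsp", "sub rsp, 0x40", "call some_function", "leave", "retn"]

if __name__ == "__main__":
    print("ratio: %.2f" % similarity_ratio(old, new))
    print("\n".join(plain_diff(old, new)))
```

For a side-by-side rendering, `difflib.HtmlDiff().make_file(old, new)` produces an HTML table with both listings in adjacent columns.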

Sometimes, you don’t need to care about small changes when diffing 2 databases. For example, you may be looking just for the new features added to this or that program instead of the bugs fixed in a product. We will continue with the previous binaries for this example. Go to the “Partial matches” tab and find the functions “respond” and “scan_response”:

According to the ratios shown it seems these functions are almost equal with small changes. Let’s see the differences for the function “respond”: right click on the respond function and select “Diff pseudo-code”:

It seems that the only change in this function is, actually, the size of a stack variable and the size given to the “protocol_response” function call. If we’re looking for the new functionality added to the product, it can be irritating to go through a big list of small changes (or at least it is for me). We will re-diff both databases: run Diaphora again by either running diaphora.py or selecting Edit → Plugins → Diaphora – Export or diff and, in the dialog, this time select “Relaxed calculations on difference ratios” as shown below:

Press OK and wait for it to finish. When it’s finished, go to the “Best matches” tab and find the “respond” or “scan_response” functions:

This time, as we can see, both functions appear in the “Best matches”, the list of functions that are considered equal, so you don’t need to go through a big list with small changes here and there: the “Partial matches” tab will show only functions with bigger changes, making it easier to discover the new functionalities added to the program.
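One way to picture the effect of this option is the sketch below. It rests on an assumption that is not taken from Diaphora's source: that a match lands in “Best matches” when its ratio reaches a threshold, and that relaxing the calculation behaves like lowering that threshold. The function name `split_matches`, the threshold values and the ratios for “respond” and “scan_response” are all illustrative; only the 0.49 ratio for “handle_scan_item” comes from the example above.

```python
def split_matches(matches, best_threshold=1.0):
    """Split (name, ratio) pairs into (best, partial) lists.

    ASSUMPTION: a single threshold decides the bucket. This is a mental
    model of the option's effect, not Diaphora's actual algorithm.
    """
    best, partial = [], []
    for name, ratio in matches:
        if ratio >= best_threshold:
            best.append((name, ratio))
        else:
            partial.append((name, ratio))
    return best, partial


# Illustrative ratios; only 0.49 appears in the text above.
matches = [("respond", 0.99), ("scan_response", 0.98), ("handle_scan_item", 0.49)]

strict_best, strict_partial = split_matches(matches)
relaxed_best, relaxed_partial = split_matches(matches, best_threshold=0.95)
```

With the strict threshold all three functions stay in the partial list; with the relaxed one only “handle_scan_item” remains there, which mirrors the behaviour described above.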

One of the most common tasks in reverse engineering, at least in my experience, is porting symbols from previous versions of a program, library, etc… to the new version. It can be quite annoying to have to port function names, enumerations, comments, structure definitions, etc… manually to new versions, especially when talking about big reverse engineering projects.

In the following example, we will import the symbols, structures, enumerations, comments, prototypes, function flags, IDA type libraries, etc… from one version full of symbols to another version with symbols stripped. We will use Busybox 1.21-1, compiled on Ubuntu Linux for x86_64. After downloading and compiling it, we will have 2 different binaries: “busybox” and “busybox_unstripped”. The latter is the version with full symbols, while the former is the one typically used for distribution, with all the symbols stripped. Launch IDA and open, first, the “busybox_unstripped” binary containing full symbols. Let IDA finish the initial auto-analysis and, after this, run Diaphora by either running diaphora.py or selecting the menu Edit → Plugins → Diaphora – Export or diff. In the dialog just opened, press OK:

Wait until Diaphora finishes exporting the current database to SQLite. When it finishes, close the current IDA database and open the binary “busybox”, wait until IDA finishes the initial auto-analysis and then launch Diaphora again. In the next dialog, select as the SQLite database to diff the one we just created, the one with all the symbols from the previous binary:

Press OK and wait until it finishes comparing both databases. After a while, it will show various tabs with all the unmatched functions in both databases, as well as the “Best”, “Partial” and “Unreliable” matches tabs.

As we can see, Diaphora did a decent job, matching 796 functions labeled as “Best matches” and 2296 labeled as “Partial matches”, a total of 3092 functions out of 3134. Let’s go to the “Best matches” tab. All the functions here are those that were matched with a high confidence ratio. Let’s say that we want to import all the symbols for the “Best matches”: right click on the list and select “Import all functions”. It will ask if we really want to do so: press YES. It will import all function names, comments, function prototypes, structures, enumerations and even IDA’s type libraries (TILs). When it’s done it will ask us if we want to relaunch the diffing process:

After Diaphora imports symbols it will also update the database with the exported data from the primary database and, with the new information, it may be possible to match new functions not discovered before. In this case we will just say “NO” to this dialog. Now, go to the “Partial matches” tab. In this list we have some matches that don’t look like good ones:

As we can see, the ratios are pretty low: from 0.00 to 0.14. Let’s diff the graphs of the “make_device” function (matched with the “same name” heuristic):

It doesn’t look like a good match. And, even if it is, the function is too big to verify right now. Let’s delete this result: go back to the “Partial matches” tab, select the “make_device” match and simply press “DEL”. It will just remove this result. Now, do the same for the next results with a 0.00 confidence ratio. OK, bad results removed. Now, it’s time to import all the partial matches: right click on the list and select “Import all data for sub_* functions”. It will import everything for functions that are auto-named by IDA, those that start with the “sub_” prefix, but will not touch any function whose name was not auto-generated by IDA. It will ask us for confirmation:

Press “Yes”, and wait until it exports everything and updates the primary database. And, that’s all! We just imported everything from function names, comments or prototypes to structures and enumerations and even IDA’s type libraries into the new database and we can just continue with our work with the new database and with all the data imported from the database we used to work on before.
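The selection logic of this walkthrough (discard the matches with a 0.00 ratio, then import only into functions that still carry an auto-generated “sub_” name) can be sketched in plain Python. The `Match` tuple, its field names and the sample entries are hypothetical and exist only for illustration; they are not Diaphora's data model.

```python
from collections import namedtuple

# Hypothetical record: name in the current (stripped) database, name in the
# exported database with symbols, and the ratio reported for the match.
Match = namedtuple("Match", ["primary_name", "secondary_name", "ratio"])


def importable(matches, min_ratio=0.0):
    """Keep matches above min_ratio whose primary name is auto-generated."""
    return [
        m
        for m in matches
        if m.ratio > min_ratio and m.primary_name.startswith("sub_")
    ]


# Invented sample data.
sample = [
    Match("sub_401000", "func_a", 0.00),  # bad match, dropped by the ratio test
    Match("sub_402000", "func_b", 0.93),  # kept: auto-named and non-zero ratio
    Match("main", "main", 1.00),          # skipped: already has a real name
]

selected = importable(sample)
```

Here `selected` contains only the `sub_402000` entry, which corresponds to deleting the 0.00 results by hand and then importing data for the “sub_*” functions.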

Diaphora uses multiple heuristics to find matches between different functions. Other competing tools like DarunGrim or TurboDiff implement one, two or a handful of heuristics, with the only exception of Zynamics BinDiff, which implements a lot of heuristics. However, Diaphora is the only one implementing heuristics based on the Hex-Rays decompiler (if it’s available). The following list explains all the heuristics currently implemented in Diaphora as of Release Candidate 1:

The very first attempt is to check whether everything in both databases, even the primary key values, is equal. If so, the databases are considered 100% equal and nothing else is done.

Equal pseudo-code. The pseudo-code generated by the Hex-Rays decompiler is equal for both functions. It can match code from x86, x86_64 and ARM interchangeably.

Equal assembly. The assembly of both functions is exactly equal.

Bytes hash and names. The first byte of each assembly instruction is equal and also the referenced true names, not IDA’s auto-generated ones, have the same names.

Same address, nodes, edges and mnemonics. The number of basic blocks, their addresses, the number of edges and the mnemonics in both databases are equal.

Same name. The mangled or demangled name is the same in both functions.

Same address, nodes, edges and primes (re-ordered instructions). The address, the number of basic blocks, the number of edges and the prime corresponding to the cyclomatic complexity are equal in both functions. It typically matches functions with re-ordered instructions.

Import names hash. The functions called from both functions are the same, matched by the demangled names.

Nodes, edges, complexity, mnemonics, names, prototype, in-degree and out-degree. The number of basic blocks, the mnemonics, the names, the function’s prototype, the in-degree (calls to the function) and the out-degree (calls performed to other functions) are the same.

Nodes, edges, complexity, mnemonics, names and prototype. The number of basic blocks, edges, the cyclomatic complexity, the mnemonics, the true names used in the function and even the prototype of the function (stripping the function name) are the same.

Mnemonics and names. The functions have the same mnemonics and the same true names used in the function. It’s done for functions with the same number of instructions.

Small names difference. At least 50% of the true names used in both functions are the same.

Pseudo-code fuzzy hash. It checks the normal fuzzy hash (calculated with the DeepToad’s library kfuzzy.py) for both functions.

Pseudo-code fuzzy hashes. It checks all the 3 fuzzy hashes (calculated with the DeepToad’s library kfuzzy.py) for both functions. This is considered a slow heuristic.

Similar pseudo-code. The pseudo-code generated by the Hex-Rays decompiler is similar, with a confidence ratio greater than or equal to 0.729. This is considered a slow heuristic.

Pseudo-code fuzzy AST hash. The fuzzy hash calculated via SPP (small-primes-product) from the AST of the Hex-Rays decompiled function is the same for both functions. It typically catches C constructions that are re-ordered, not just re-ordered assembly instructions.

Partial pseudo-code fuzzy hash. At least the first 16 bytes of the fuzzy hash (calculated with the DeepToad’s library kfuzzy.py) match for both functions. This is considered a slow heuristic.

Same high complexity, prototype and names. The cyclomatic complexity is at least 20, and the prototype and the true names used in the function are the same for both databases.

Same high complexity and names. Same as before but ignoring the function’s prototype.

Bytes hash. The first byte of each instruction is the same for both functions. This is considered a slow heuristic.

Nodes, edges, complexity and mnemonics. The number of basic blocks, relations, the cyclomatic complexity (naturally) and the mnemonics are the same. It can match very similar functions that actually perform opposite operations (like add_XXX and sub_XXX). Besides, this is considered a slow heuristic.

Nodes, edges, complexity and prototype. Same as before but the mnemonics are ignored and only the true names used in both functions are considered. This is considered a slow heuristic.

Nodes, edges, complexity, in-degree and out-degree. The number of basic blocks, edges, cyclomatic complexity (naturally), the number of functions calling it and the number of functions called from both functions are the same. This is considered a slow heuristic.

Nodes, edges and complexity. Same number of nodes, edges and, naturally, cyclomatic complexity values. This is considered a slow heuristic.

Similar pseudo-code. The pseudo-codes are considered similar with a confidence ratio of 0.58 or less. This is considered a slow heuristic.

Same high complexity. Both functions have the same high cyclomatic complexity, it being at least 50. This is considered a slow heuristic.

Similar small pseudo-code. The pseudo-code generated by the Hex-Rays decompiler is 5 lines long or less and is the same for both functions. It matches too many things and the calculated confidence ratio is typically bad.

Small pseudo-code fuzzy AST hash. Same as “Pseudo-code fuzzy AST hash” but applied to functions with 5 or fewer lines of pseudo-code. Like the previous heuristic, it matches too many things and the calculated confidence ratio is typically bad.

Similar small pseudo-code. Even worse than “Similar small pseudo-code”, as it tries to match similar functions with 5 or fewer lines of pseudo-code, matching almost anything and getting confidence ratios of 0.25 if you are lucky.

Equal small pseudo-code. Even worse than before, as it matches functions with the same pseudo-code that are 5 or fewer lines of code long. Typically, you can get 2 or 3 results that are, actually, wrong.

Same low complexity, prototype and names. The prototype of the functions, the true names used in the functions and their cyclomatic complexity, it being less than 20, are the same. It worked for me once, I think.

Same low complexity and names. The cyclomatic complexity, it being less than 20, and the true names used in the function are the same. It typically matches functions already matched by other heuristics, so its usefulness is really limited.
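Several of the attributes named in the list above are simple to compute, and a small sketch may make them more concrete. The following Python 3 code illustrates three of them: cyclomatic complexity from node and edge counts, a small-primes-product (SPP) that is insensitive to instruction order, and a hash over the first byte of each instruction. The function names, the way primes are assigned to mnemonics, the choice to apply SPP to mnemonics and the use of MD5 are all assumptions made for illustration, not details taken from Diaphora's source.

```python
import hashlib


def cyclomatic_complexity(nodes, edges, components=1):
    """McCabe's metric for a control-flow graph: E - N + 2P."""
    return edges - nodes + 2 * components


def first_primes(count):
    """Return the first `count` primes using trial division."""
    found = []
    candidate = 2
    while len(found) < count:
        if all(candidate % p for p in found):
            found.append(candidate)
        candidate += 1
    return found


def small_primes_product(mnemonics, table):
    """Multiply the prime assigned to each mnemonic.

    Multiplication is commutative, so re-ordered instructions
    produce the same product.
    """
    product = 1
    for mnemonic in mnemonics:
        product *= table[mnemonic]
    return product


def bytes_hash(instructions):
    """Hash the first byte of every instruction (each given as bytes).

    ASSUMPTION: MD5 is used here only as a convenient digest.
    """
    first_bytes = bytes(ins[0] for ins in instructions)
    return hashlib.md5(first_bytes).hexdigest()


# Two orderings of the same instructions (invented example).
func_a = ["push", "mov", "add", "pop", "retn"]
func_b = ["push", "add", "mov", "pop", "retn"]
names = sorted(set(func_a) | set(func_b))
table = dict(zip(names, first_primes(len(names))))

# Two encodings of `push rbp; mov rbp, rsp; retn` that share first bytes.
enc_a = [b"\x55", b"\x48\x89\xe5", b"\xc3"]
enc_b = [b"\x55", b"\x48\x8b\xec", b"\xc3"]
```

With these definitions, `small_primes_product(func_a, table)` and `small_primes_product(func_b, table)` are equal even though the instruction order differs, and `bytes_hash(enc_a)` equals `bytes_hash(enc_b)` because only the first byte of each instruction is considered.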